AWS Certified AI Practitioner Exam Questions and Answers

A financial company is using ML to help with some of the company's tasks.

Which option is a use of generative AI models?

A company makes forecasts each quarter to decide how to optimize operations to meet expected demand. The company uses ML models to make these forecasts.

An AI practitioner is writing a report about the trained ML models to provide transparency and explainability to company stakeholders.

What should the AI practitioner include in the report to meet the transparency and explainability requirements?

A company is building a large language model (LLM) question answering chatbot. The company wants to decrease the number of actions call center employees need to take to respond to customer questions.

Which business objective should the company use to evaluate the effect of the LLM chatbot?

A social media company wants to use a large language model (LLM) for content moderation. The company wants to evaluate the LLM outputs for bias and potential discrimination against specific groups or individuals.

Which data source should the company use to evaluate the LLM outputs with the LEAST administrative effort?

Which AWS feature records details about ML instance data for governance and reporting?

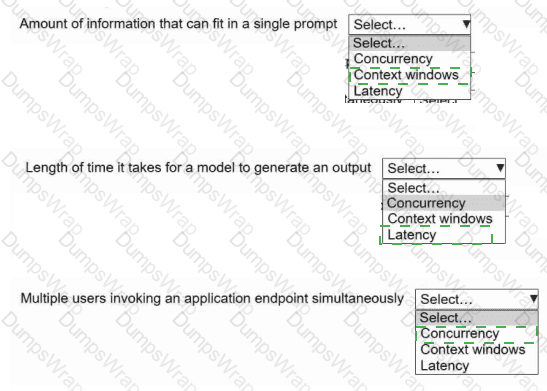

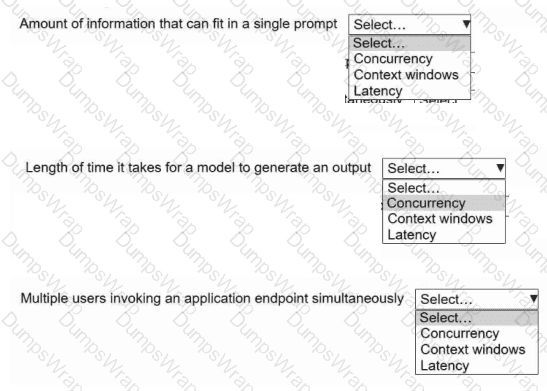

A company is building a generative AI application and is reviewing foundation models (FMs). The company needs to consider multiple FM characteristics.

Select the correct FM characteristic from the following list for each definition. Each FM characteristic should be selected one time. (Select THREE.)

Concurrency

Context windows

Latency

A company wants to create a new solution by using AWS Glue. The company has minimal programming experience with AWS Glue.

Which AWS service can help the company use AWS Glue?

A company wants to build an interactive application for children that generates new stories based on classic stories. The company wants to use Amazon Bedrock and needs to ensure that the results and topics are appropriate for children.

Which AWS service or feature will meet these requirements?

A company wants to create an application by using Amazon Bedrock. The company has a limited budget and prefers flexibility without long-term commitment.

Which Amazon Bedrock pricing model meets these requirements?

What does an F1 score measure in the context of foundation model (FM) performance?
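
As background for the question above, F1 is the harmonic mean of precision and recall. The following is a minimal sketch of that calculation; the function name and the confusion counts are hypothetical and are not part of the exam material.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Return F1, the harmonic mean of precision and recall.

    tp, fp, fn are true positives, false positives, and false negatives.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0  # avoid division by zero when nothing was predicted correctly
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts: 8 correct positives, 2 false alarms, 2 misses.
example_f1 = f1_score(tp=8, fp=2, fn=2)
```

Because the harmonic mean is pulled toward the smaller value, a model cannot score well on F1 by maximizing precision or recall alone.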

A company is using a pre-trained large language model (LLM) to build a chatbot for product recommendations. The company needs the LLM outputs to be short and written in a specific language.

Which solution will align the LLM response quality with the company's expectations?

Which technique breaks a complex task into smaller subtasks that are sent sequentially to a large language model (LLM)?
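
Sending subtasks to a model one after another, with each output feeding the next prompt, is commonly called prompt chaining. The sketch below illustrates the idea only: `echo_llm` is a hypothetical stand-in for a real model call, and the step templates are made up for illustration.

```python
from typing import Callable, List


def run_chain(llm: Callable[[str], str], steps: List[str], initial_input: str) -> str:
    """Run prompt templates in order, feeding each output into the next step."""
    result = initial_input
    for template in steps:
        # Each template has one {input} slot filled by the previous result.
        prompt = template.format(input=result)
        result = llm(prompt)
    return result


def echo_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call: wraps the prompt in brackets."""
    return f"[{prompt}]"


steps = ["Summarize: {input}", "Translate to French: {input}"]
final_output = run_chain(echo_llm, steps, "long report text")
```

In a real application, `echo_llm` would be replaced by a call to the chosen model, and each step could be inspected or validated before the next one runs.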

A large retail bank wants to develop an ML system to help the risk management team decide on loan allocations for different demographics.

What must the bank do to develop an unbiased ML model?

An AI practitioner who has minimal ML knowledge wants to predict employee attrition without writing code. Which Amazon SageMaker feature meets this requirement?

A company is using a pre-trained large language model (LLM) to extract information from documents. The company noticed that a newer LLM from a different provider is available on Amazon Bedrock. The company wants to transition to the new LLM on Amazon Bedrock.

What does the company need to do to transition to the new LLM?

An AI practitioner is building a model to generate images of humans in various professions. The AI practitioner discovered that the input data is biased and that specific attributes affect the image generation and create bias in the model.

Which technique will solve the problem?

A company wants to develop a large language model (LLM) application by using Amazon Bedrock and customer data that is uploaded to Amazon S3. The company's security policy states that each team can access data for only the team's own customers.

Which solution will meet these requirements?

A company has documents that are missing some words because of a database error. The company wants to build an ML model that can suggest potential words to fill in the missing text.

Which type of model meets this requirement?

A pharmaceutical company wants to analyze user reviews of new medications and provide a concise overview for each medication. Which solution meets these requirements?

A food service company wants to develop an ML model to help decrease daily food waste and increase sales revenue. The company needs to continuously improve the model's accuracy.

Which solution meets these requirements?

A company trained an ML model on Amazon SageMaker to predict customer credit risk. The model shows 90% recall on training data and 40% recall on unseen testing data.

Which conclusion can the company draw from these results?
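
To make the numbers in this scenario concrete, the sketch below computes recall on both datasets and the gap between them. The confusion counts are hypothetical values chosen to reproduce the 90% and 40% figures. A large drop from training to unseen data is commonly read as a sign of overfitting.

```python
def recall(tp: int, fn: int) -> float:
    """Recall: the share of actual positives that the model found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical counts that match the scenario's percentages.
train_recall = recall(tp=90, fn=10)  # 90 of 100 positives found in training data
test_recall = recall(tp=40, fn=60)   # 40 of 100 positives found in unseen data

# The difference between training and test performance.
generalization_gap = train_recall - test_recall
```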

An AI practitioner must fine-tune an open source large language model (LLM) for text categorization. The dataset is already prepared.

Which solution will meet these requirements with the LEAST operational effort?

A company has built an image classification model to predict plant diseases from photos of plant leaves. The company wants to evaluate how many images the model classified correctly.

Which evaluation metric should the company use to measure the model's performance?
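
Counting how many images were classified correctly corresponds to accuracy: correct predictions divided by total predictions. A minimal sketch follows, with made-up labels for illustration.

```python
from typing import List


def accuracy(y_true: List[str], y_pred: List[str]) -> float:
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        return 0.0
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Hypothetical leaf-photo labels: three of four predictions match.
true_labels = ["rust", "healthy", "blight", "rust"]
predicted_labels = ["rust", "healthy", "rust", "rust"]
leaf_accuracy = accuracy(true_labels, predicted_labels)
```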

An AI practitioner needs to improve the accuracy of a natural language generation model. The model uses rapidly changing inventory data.

Which technique will improve the model's accuracy?

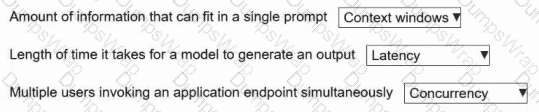

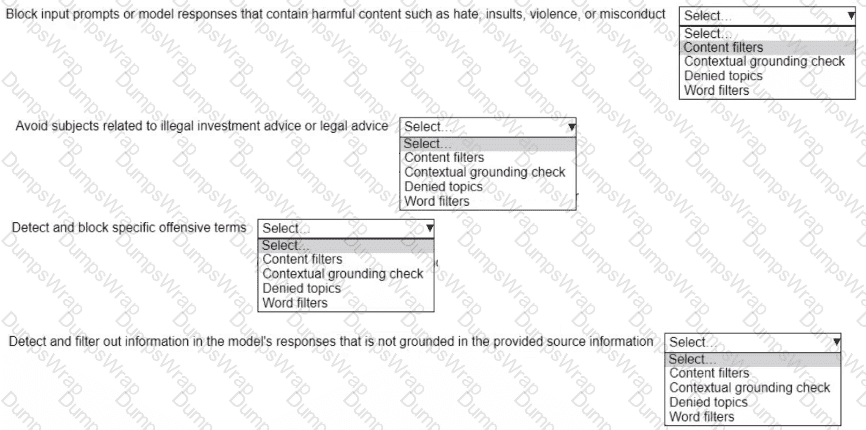

A company is using a generative AI model to develop a digital assistant. The model's responses occasionally include undesirable and potentially harmful content. Select the correct Amazon Bedrock filter policy from the following list for each mitigation action. Each filter policy should be selected one time. (Select FOUR.)

• Content filters

• Contextual grounding check

• Denied topics

• Word filters

A company wants to use language models to create an application for inference on edge devices. The inference must have the lowest latency possible.

Which solution will meet these requirements?

An ecommerce company wants to improve search engine recommendations by customizing the results for each user of the company's ecommerce platform. Which AWS service meets these requirements?

A hospital is developing an AI system to assist doctors in diagnosing diseases based on patient records and medical images. To comply with regulations, the sensitive patient data must not leave the country the data is located in.

Which data governance strategy will ensure compliance and protect patient privacy?

A company runs a website for users to make travel reservations. The company wants an AI solution to help create consistent branding for hotels on the website. The AI solution needs to generate hotel descriptions for the website in a consistent writing style. Which AWS service will meet these requirements?

A company wants to build a lead prioritization application for its employees to contact potential customers. The application must give employees the ability to view and adjust the weights assigned to different variables in the model based on domain knowledge and expertise.

Which ML model type meets these requirements?

A company has developed an ML model for image classification. The company wants to deploy the model to production so that a web application can use the model.

The company needs to implement a solution to host the model and serve predictions without managing any of the underlying infrastructure.

Which solution will meet these requirements?

Which statement presents an advantage of using Retrieval Augmented Generation (RAG) for natural language processing (NLP) tasks?
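
For readers unfamiliar with RAG, the toy sketch below shows the two core steps: retrieve relevant text, then place it in the prompt so the model answers from that context. Word overlap is used here as a simplified stand-in for the embedding similarity search that a real system would typically use, and all names and documents are hypothetical.

```python
from typing import List


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents sharing the most words with the query."""
    query_words = set(query.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 1) -> str:
    """Prepend the retrieved context to the user's question."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


docs = ["The warranty lasts two years.", "Shipping takes five days."]
prompt = build_prompt("how long is the warranty", docs)
```

Because the context is fetched at query time, the documents can be updated without retraining the model.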

A company wants to use large language models (LLMs) with Amazon Bedrock to develop a chat interface for the company's product manuals. The manuals are stored as PDF files.

Which solution meets these requirements MOST cost-effectively?

A company created an AI voice model that is based on a popular presenter. The company is using the model to create advertisements. However, the presenter did not consent to the use of his voice for the model. The presenter demands that the company stop the advertisements.

Which challenge of working with generative AI does this scenario demonstrate?

A company has terabytes of data in a database that the company can use for business analysis. The company wants to build an AI-based application that can build a SQL query from input text that employees provide. The employees have minimal experience with technology.

Which solution meets these requirements?

A company uses a foundation model (FM) from Amazon Bedrock for an AI search tool. The company wants to fine-tune the model to be more accurate by using the company's data.

Which strategy will successfully fine-tune the model?

A publishing company built a Retrieval Augmented Generation (RAG) based solution to give its users the ability to interact with published content. New content is published daily. The company wants to provide a near real-time experience to users.

Which steps in the RAG pipeline should the company implement by using offline batch processing to meet these requirements? (Select TWO.)

A company wants to identify harmful language in the comments section of social media posts by using an ML model. The company will not use labeled data to train the model. Which strategy should the company use to identify harmful language?

Which AWS service or feature stores embeddings in a vector database for use with foundation models (FMs) and Retrieval Augmented Generation (RAG)?

A financial institution is using Amazon Bedrock to develop an AI application. The application is hosted in a VPC. To meet regulatory compliance standards, the VPC is not allowed access to any internet traffic.

Which AWS service or feature will meet these requirements?

Which strategy evaluates the accuracy of a foundation model (FM) that is used in image classification tasks?

A financial company uses a generative AI model to assign credit limits to new customers. The company wants to make the decision-making process of the model more transparent to its customers.

An online learning company with large volumes of educational materials wants to use enterprise search. Which AWS service meets these requirements?

Which term is an example of output vulnerability?

A medical company is customizing a foundation model (FM) for diagnostic purposes. The company needs the model to be transparent and explainable to meet regulatory requirements.

Which solution will meet these requirements?

A company is using domain-specific models. The company wants to avoid creating new models from the beginning. The company instead wants to adapt pre-trained models to create models for new, related tasks.

Which ML strategy meets these requirements?

A company wants to classify human genes into 20 categories based on gene characteristics. The company needs an ML algorithm to document how the inner mechanism of the model affects the output.

Which ML algorithm meets these requirements?

An ecommerce company is using a chatbot to automate the customer order submission process. The chatbot is powered by AI and is available to customers directly from the company's website 24 hours a day, 7 days a week.

Which option is an AI system input vulnerability that the company needs to resolve before the chatbot is made available?

In which stage of the generative AI model lifecycle are tests performed to examine the model's accuracy?

A company wants to develop an educational game where users answer questions such as the following: "A jar contains six red, four green, and three yellow marbles. What is the probability of choosing a green marble from the jar?"

Which solution meets these requirements with the LEAST operational overhead?
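
The sample question in this scenario has a deterministic answer that a few lines of code can compute exactly. The sketch below is one possible illustration; the function name and the jar representation are assumptions.

```python
from fractions import Fraction
from typing import Dict


def probability_of(color: str, jar: Dict[str, int]) -> Fraction:
    """Probability of drawing one marble of the given color from the jar."""
    total = sum(jar.values())
    if total == 0:
        raise ValueError("the jar is empty")
    return Fraction(jar.get(color, 0), total)


# The jar from the example question: six red, four green, three yellow.
jar = {"red": 6, "green": 4, "yellow": 3}
green_probability = probability_of("green", jar)  # 4 of 13 marbles are green
```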

A company has thousands of customer support interactions per day and wants to analyze these interactions to identify frequently asked questions and develop insights.

Which AWS service can the company use to meet this requirement?

A company has developed a generative text summarization application by using Amazon Bedrock. The company will use Amazon Bedrock automatic model evaluation capabilities.

Which metric should the company use to evaluate the accuracy of the model?

Which option is an example of unsupervised learning?

What does inference refer to in the context of AI?

A company has a generative AI application that uses a pre-trained foundation model (FM) on Amazon Bedrock. The company wants the FM to include more context by using company information.

Which solution meets these requirements MOST cost-effectively?

A company deployed an AI/ML solution to help customer service agents respond to frequently asked questions. The questions can change over time. The company wants to give customer service agents the ability to ask questions and receive automatically generated answers to common customer questions. Which strategy will meet these requirements MOST cost-effectively?

A research company implemented a chatbot by using a foundation model (FM) from Amazon Bedrock. The chatbot searches for answers to questions from a large database of research papers.

After multiple prompt engineering attempts, the company notices that the FM is performing poorly because of the complex scientific terms in the research papers.

How can the company improve the performance of the chatbot?

A company is building a contact center application and wants to gain insights from customer conversations. The company wants to analyze and extract key information from the audio of the customer calls.

Which solution meets these requirements?

A company is building a solution to generate images for protective eyewear. The solution must have high accuracy and must minimize the risk of incorrect annotations.

Which solution will meet these requirements?

An AI practitioner is using an Amazon Bedrock base model to summarize session chats from the customer service department. The AI practitioner wants to store invocation logs to monitor model input and output data.

Which strategy should the AI practitioner use?

An AI practitioner is developing a prompt for large language models (LLMs) in Amazon Bedrock. The AI practitioner must ensure that the prompt works across all Amazon Bedrock LLMs.

Which characteristic can differ across the LLMs?

A company uses Amazon SageMaker and various models for its AI workloads. The company needs to understand if its AI workloads are ISO compliant.

Which AWS service or feature meets these requirements?

A financial company uses AWS to host its generative AI models. The company must generate reports to show adherence to international regulations for handling sensitive customer data.

Why does overfitting occur in ML models?

A company wants to keep its foundation model (FM) relevant by using the most recent data. The company wants to implement a model training strategy that includes regular updates to the FM.

Which solution meets these requirements?

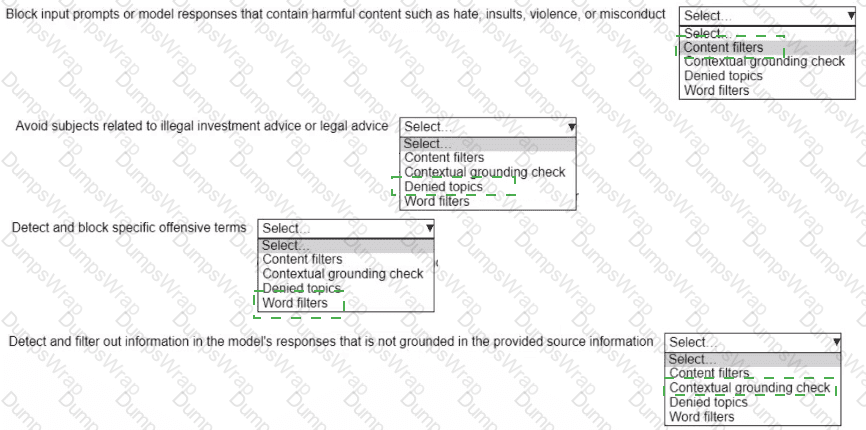

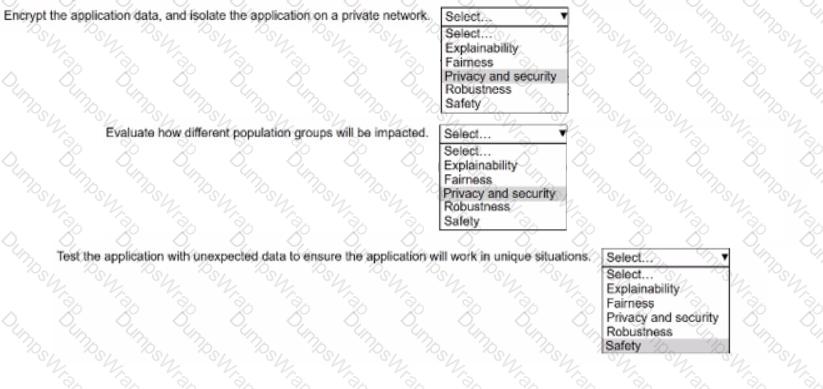

Which THREE of the following principles of responsible AI are most critical to this scenario? (Choose 3)

* Explainability

* Fairness

* Privacy and security

* Robustness

* Safety

A company wants to upload customer service email messages to Amazon S3 to develop a business analysis application. The messages sometimes contain sensitive data. The company wants to receive an alert every time sensitive information is found.

Which solution fully automates the sensitive information detection process with the LEAST development effort?

A retail company wants to build an ML model to recommend products to customers. The company wants to build the model based on responsible practices. Which practice should the company apply when collecting data to decrease model bias?

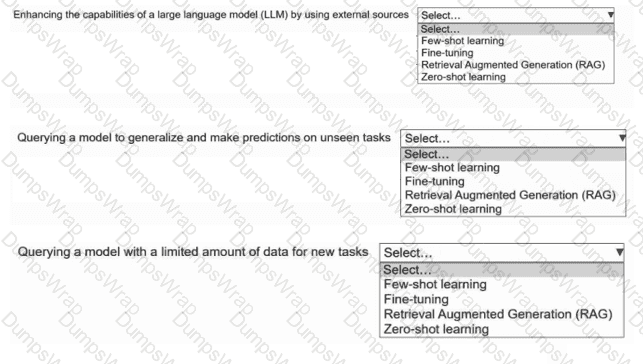

A company wants to improve multiple ML models.

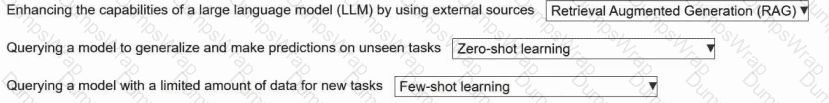

Select the correct technique from the following list of use cases. Each technique should be selected one time or not at all. (Select THREE.)

Few-shot learning

Fine-tuning

Retrieval Augmented Generation (RAG)

Zero-shot learning

A company is developing an editorial assistant application that uses generative AI. During the pilot phase, usage is low and application performance is not a concern. The company cannot predict application usage after the application is fully deployed and wants to minimize application costs.

Which solution will meet these requirements?

A company uses Amazon Comprehend to analyze customer feedback. A customer has several unique trained models. The company uses Comprehend to assign each model an endpoint. The company wants to automate a report on each endpoint that is not used for more than 15 days.

A company that uses multiple ML models wants to identify changes in original model quality so that the company can resolve any issues.

Which AWS service or feature meets these requirements?

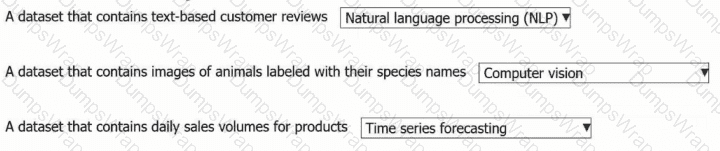

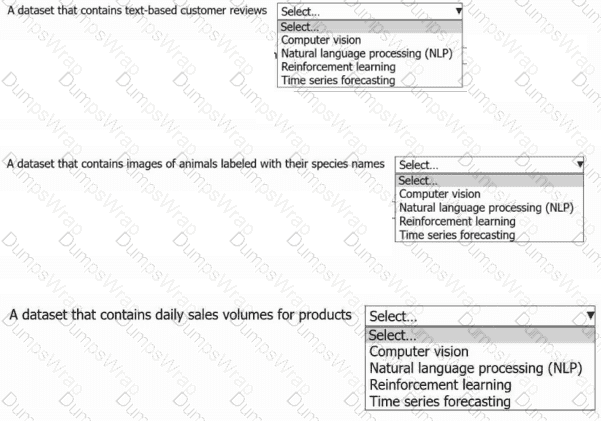

A company has multiple datasets that contain historical data. The company wants to use ML technologies to process each dataset.

Select the correct ML technology from the following list for each dataset. Select each ML technology one time or not at all. (Select THREE.)

Computer vision

Natural language processing (NLP)

Reinforcement learning

Time series forecasting

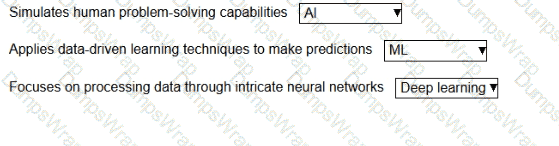

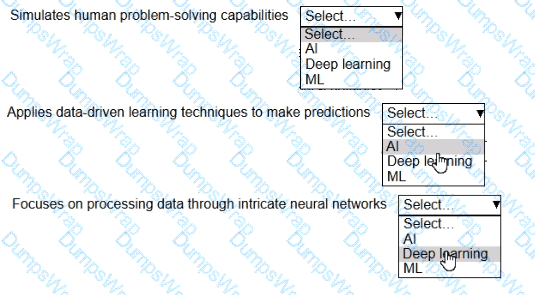


Select the correct AI term from the following list for each statement. Each AI term should be selected one time. (Select THREE.)

• AI

• Deep learning

• ML

A financial company is developing a generative AI application for loan approval decisions. The company needs the application output to be responsible and fair.

What does an F1 score measure in the context of foundation model (FM) performance?

A company wants to use a pre-trained generative AI model to generate content for its marketing campaigns. The company needs to ensure that the generated content aligns with the company's brand voice and messaging requirements.

Which solution meets these requirements?

A company built a deep learning model for object detection and deployed the model to production.

Which AI process occurs when the model analyzes a new image to identify objects?

An accounting firm wants to implement a large language model (LLM) to automate document processing. The firm must proceed responsibly to avoid potential harms.

What should the firm do when developing and deploying the LLM? (Select TWO.)

A company is developing a new model to predict the prices of specific items. The model performed well on the training dataset. When the company deployed the model to production, the model's performance decreased significantly.

What should the company do to mitigate this problem?

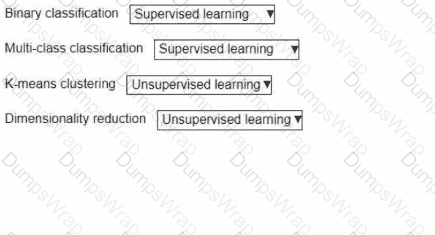

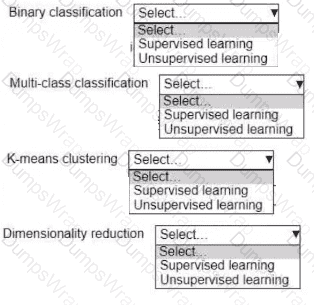

A company wants to develop ML applications to improve business operations and efficiency.

Select the correct ML paradigm from the following list for each use case. Each ML paradigm should be selected one or more times. (Select FOUR.)

• Supervised learning

• Unsupervised learning

Which feature of Amazon OpenSearch Service gives companies the ability to build vector database applications?