Google Cloud Certified - Professional Cloud Security Engineer Questions and Answers

Your company's storage team manages all product images within a specific Google Cloud project. To maintain control, you must isolate access to Cloud Storage for this project, allowing the storage team to manage restrictions at the project level. They must be restricted to using corporate computers. What should you do?

Your company is moving to Google Cloud. You plan to sync your users first by using Google Cloud Directory Sync (GCDS). Some employees have already created Google Cloud accounts by using their company email addresses that were created outside of GCDS. You must create your users on Cloud Identity.

What should you do?

Your Google Cloud organization allows for administrative capabilities to be distributed to each team through provision of a Google Cloud project with Owner role (roles/owner). The organization contains thousands of Google Cloud projects. Security Command Center Premium has surfaced multiple OPEN_MYSQL_PORT findings. You are enforcing the guardrails and need to prevent these types of common misconfigurations.

What should you do?

You have stored company approved compute images in a single Google Cloud project that is used as an image repository. This project is protected with VPC Service Controls and exists in the perimeter along with other projects in your organization. This lets other projects deploy images from the image repository project. A team requires deploying a third-party disk image that is stored in an external Google Cloud organization. You need to grant read access to the disk image so that it can be deployed into the perimeter.

What should you do?

You need to create a VPC that enables your security team to control network resources such as firewall rules. How should you configure the network to allow for separation of duties for network resources?

An organization receives an increasing number of phishing emails.

Which method should be used to protect employee credentials in this situation?

You are onboarding new users into Cloud Identity and discover that some users have created consumer user accounts using the corporate domain name. How should you manage these consumer user accounts with Cloud Identity?

Your organization needs to restrict the types of Google Cloud services that can be deployed within specific folders to enforce compliance requirements. You must apply these restrictions only to the designated folders, without affecting other parts of the resource hierarchy. You want to use the most efficient and simple method. What should you do?

Your organization enforces a custom organization policy that disables the use of Compute Engine VM instances with external IP addresses. However, a regulated business unit requires an exception to temporarily use external IPs for a third-party audit process. The regulated business workload must comply with least privilege principles and minimize policy drift. You need to ensure secure policy management and proper handling. What should you do?

Your organization is using Active Directory and wants to configure Security Assertion Markup Language (SAML). You must set up and enforce single sign-on (SSO) for all users.

What should you do?

Your organization uses Google Workspace Enterprise Edition for authentication. You are concerned about employees leaving their laptops unattended for extended periods of time after authenticating into Google Cloud. You must prevent malicious people from using an employee's unattended laptop to modify their environment.

What should you do?

Your organization wants to be continuously evaluated against CIS Google Cloud Computing Foundations Benchmark v1.3.0 (CIS Google Cloud Foundation 1.3). Some of the controls are irrelevant to your organization and must be disregarded in evaluation. You need to create an automated system or process to ensure that only the relevant controls are evaluated.

What should you do?

Your organization is developing a sophisticated machine learning (ML) model to predict customer behavior for targeted marketing campaigns. The BigQuery dataset used for training includes sensitive personal information. You must design the security controls around the AI/ML pipeline. Data privacy must be maintained throughout the model's lifecycle, and you must ensure that personal data is not used in the training process. Additionally, you must restrict access to the dataset to an authorized subset of people only. What should you do?

Your organization has 3 TB of information in BigQuery and Cloud SQL. You need to develop a cost-effective, scalable, and secure strategy to anonymize the personally identifiable information (PII) that exists today. What should you do?

Your organization is moving virtual machines (VMs) to Google Cloud. You must ensure that operating system images that are used across your projects are trusted and meet your security requirements.

What should you do?

The CISO of your highly regulated organization has mandated that all AI applications running in production must be based on Google first-party models. Your security team has now implemented the Model Garden's organization policy meant to centrally control access and user actions on these approved models at the production folder level. However, it appears that someone has overwritten the policy. This has allowed developers to access third-party models on a particular production project. You need to resolve the issue with a solution that prevents a repeat occurrence. What should you do?

Your organization is using Google Workspace, Google Cloud, and a third-party SIEM. You need to export events such as user logins, successful logins, and failed logins to the SIEM. Logs need to be ingested in real time or near real time. What should you do?

You are a consultant for an organization that is considering migrating their data from its private cloud to Google Cloud. The organization’s compliance team is not familiar with Google Cloud and needs guidance on how compliance requirements will be met on Google Cloud. One specific compliance requirement is for customer data at rest to reside within specific geographic boundaries. Which option should you recommend for the organization to meet their data residency requirements on Google Cloud?

An application running on a Compute Engine instance needs to read data from a Cloud Storage bucket. Your team does not allow Cloud Storage buckets to be globally readable and wants to ensure the principle of least privilege.

Which option meets the requirement of your team?

Your company recently published a security policy to minimize the usage of service account keys. On-premises Windows-based applications are interacting with Google Cloud APIs. You need to implement Workload Identity Federation (WIF) with your identity provider on-premises.

What should you do?

Your organization recently activated the Security Command Center (SCC) standard tier. There are a few Cloud Storage buckets that were accidentally made accessible to the public. You need to investigate the impact of the incident and remediate it.

What should you do?

You perform a security assessment on a customer architecture and discover that multiple VMs have public IP addresses. After providing a recommendation to remove the public IP addresses, you are told those VMs need to communicate to external sites as part of the customer's typical operations. What should you recommend to reduce the need for public IP addresses in your customer's VMs?

You define central security controls in your Google Cloud environment. For one of the folders in your organization, you set an organization policy to deny the assignment of external IP addresses to VMs. Two days later, you receive an alert about a new VM with an external IP address under that folder.

What could have caused this alert?

Your company has recently enabled Security Command Center at the organization level. You need to implement runtime threat detection for applications running in containers within projects residing in the production folder. Specifically, you need to be notified if additional libraries are loaded or malicious scripts are executed within these running containers. You need to configure Security Command Center to meet this requirement while ensuring findings are visible within Security Command Center. What should you do?

You are setting up Cloud Identity for your company's Google Cloud organization. User accounts will be provisioned from Microsoft Entra ID through Directory Sync and there will be a single sign-on through Entra ID. You need to secure the super administrator accounts for the organization. Your solution must follow the principle of least privilege and implement strong authentication. What should you do?

Your team wants to centrally manage GCP IAM permissions from their on-premises Active Directory Service. Your team wants to manage permissions by AD group membership.

What should your team do to meet these requirements?

Your organization operates a hybrid cloud environment and has recently deployed a private Artifact Registry repository in Google Cloud. On-premises developers cannot resolve the Artifact Registry hostname and therefore cannot push or pull artifacts. You've verified the following:

Connectivity to Google Cloud is established by Cloud VPN or Cloud Interconnect.

No custom DNS configurations exist on-premises.

There is no route to the internet from the on-premises network.

You need to identify the cause and enable the developers to push and pull artifacts. What is likely causing the issue and what should you do to fix the issue?

You are responsible for managing your company’s identities in Google Cloud. Your company enforces 2-Step Verification (2SV) for all users. You need to reset a user’s access, but the user lost their second factor for 2SV. You want to minimize risk. What should you do?

Your company uses Google Cloud and has publicly exposed network assets. You want to discover the assets and perform a security audit on these assets by using a software tool in the least amount of time.

What should you do?

Your organization hosts a financial services application running on Compute Engine instances for a third-party company. The third-party company’s servers that will consume the application also run on Compute Engine in a separate Google Cloud organization. You need to configure a secure network connection between the Compute Engine instances. You have the following requirements:

The network connection must be encrypted.

The communication between servers must be over private IP addresses.

What should you do?

Last week, a company deployed a new App Engine application that writes logs to BigQuery. No other workloads are running in the project. You need to validate that all data written to BigQuery was done using the App Engine Default Service Account.

What should you do?

How should a customer reliably deliver Stackdriver logs from GCP to their on-premises SIEM system?

Your financial services company has an audit requirement under a strict regulatory framework that requires comprehensive, immutable audit trails for all administrative and data access activity that ensures that data is kept for seven years. Your current logging is fragmented across individual projects. You need to establish a centralized, tamper-proof, long-term logging solution accessible for audits. What should you do?

Your organization’s Google Cloud VMs are deployed via an instance template that configures them with a public IP address in order to host web services for external users. The VMs reside in a service project that is attached to a host (VPC) project containing one custom Shared VPC for the VMs. You have been asked to reduce the exposure of the VMs to the internet while continuing to service external users. You have already recreated the instance template without a public IP address configuration to launch the managed instance group (MIG). What should you do?

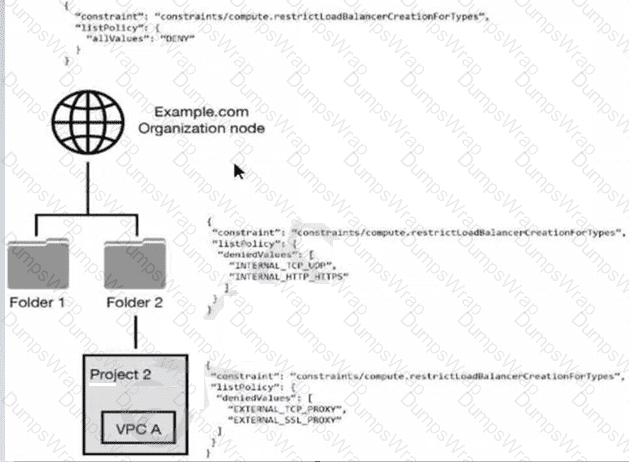

You have the following resource hierarchy. There is an organization policy at each node in the hierarchy as shown. Which load balancer types are denied in VPC A?

Your organization is rolling out a new continuous integration and delivery (CI/CD) process to deploy infrastructure and applications in Google Cloud. Many teams will use their own instances of the CI/CD workflow. It will run on Google Kubernetes Engine (GKE). The CI/CD pipelines must be designed to securely access Google Cloud APIs.

What should you do?

Your security team wants to implement a defense-in-depth approach to protect sensitive data stored in a Cloud Storage bucket. Your team has the following requirements:

The Cloud Storage bucket in Project A can only be readable from Project B.

The Cloud Storage bucket in Project A cannot be accessed from outside the network.

Data in the Cloud Storage bucket cannot be copied to an external Cloud Storage bucket.

What should the security team do?

You want to limit the images that can be used as the source for boot disks. These images will be stored in a dedicated project.

What should you do?

Your global defense company is migrating top-secret classified data to BigQuery and Cloud Storage. National security regulations demand that master encryption key material never leaves the accredited on-premises cryptographic hardware. You must retain the unilateral ability to revoke data access, independent of any cloud provider. What should you do?

Your company is developing a new application for your organization. The application consists of two Cloud Run services, service A and service B. Service A provides a web-based user front-end. Service B provides back-end services that are called by service A. You need to set up identity and access management for the application. Your solution should follow the principle of least privilege. What should you do?

Your Google Cloud environment has one organization node, one folder named "Apps," and several projects within that folder. The organization node enforces the constraints/iam.allowedPolicyMemberDomains organization policy, which allows members from the terramearth.com organization. The "Apps" folder enforces the constraints/iam.allowedPolicyMemberDomains organization policy, which allows members from the flowlogistic.com organization. It also has the inheritFromParent: false property.

You attempt to grant access to a project in the Apps folder to the user testuser@terramearth.com.

What is the result of your action and why?
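The scenario above depends on how a list constraint is evaluated when `inheritFromParent` is false. The following is a minimal Python sketch of that evaluation under a simplified model, not the Organization Policy Service itself. The `Node` class and the `effective_allowed` and `can_grant` helpers are hypothetical names, and the model assumes each policy is a plain set of allowed domains and that the root node always sets a policy (in practice the constraint is typically expressed with Cloud Identity customer IDs rather than domain names).

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """A hierarchy node (organization, folder, or project) in a toy model."""
    name: str
    parent: Optional["Node"] = None
    allowed: set = field(default_factory=set)
    has_policy: bool = False
    inherit_from_parent: bool = True


def effective_allowed(node):
    """Return the allowed domains in effect at this node (simplified)."""
    if node is None:
        # Assumption: the root always sets a policy, so this is only the
        # base case of the recursion.
        return set()
    if not node.has_policy:
        # No policy set here: the parent's effective policy applies.
        return effective_allowed(node.parent)
    if node.inherit_from_parent:
        # Allowed values are merged with those inherited from the parent.
        return node.allowed | effective_allowed(node.parent)
    # inheritFromParent: false replaces the inherited values entirely.
    return set(node.allowed)


def can_grant(node, email):
    """Check whether a member's domain is allowed at the given node."""
    domain = email.rsplit("@", 1)[1]
    return domain in effective_allowed(node)


org = Node("org", allowed={"terramearth.com"}, has_policy=True)
apps = Node("Apps", parent=org, allowed={"flowlogistic.com"},
            has_policy=True, inherit_from_parent=False)
project = Node("project", parent=apps)

grant_ok = can_grant(project, "testuser@terramearth.com")
```

In this model `grant_ok` is `False`: the folder's policy replaces the organization's allowed list instead of extending it, so only flowlogistic.com members pass the check for projects under "Apps".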

An organization is starting to move its infrastructure from its on-premises environment to Google Cloud Platform (GCP). The first step the organization wants to take is to migrate its current data backup and disaster recovery solutions to GCP for later analysis. The organization’s production environment will remain on-premises for an indefinite time. The organization wants a scalable and cost-efficient solution.

Which GCP solution should the organization use?

You have created an OS image that is hardened per your organization’s security standards and is being stored in a project managed by the security team. As a Google Cloud administrator, you need to make sure all VMs in your Google Cloud organization can only use that specific OS image while minimizing operational overhead. What should you do? (Choose two.)

While migrating your organization’s infrastructure to GCP, a large number of users will need to access GCP Console. The Identity Management team already has a well-established way to manage your users and wants to keep using your existing Active Directory or LDAP server along with the existing SSO password.

What should you do?

You are setting up a CI/CD pipeline to deploy containerized applications to your production clusters on Google Kubernetes Engine (GKE). You need to prevent containers with known vulnerabilities from being deployed. You have the following requirements for your solution:

Must be cloud-native

Must be cost-efficient

Minimize operational overhead

How should you accomplish this? (Choose two.)

Your organization has an operational image classification model running on a managed AI service on Google Cloud. You are in a configuration review with stakeholders and must describe the security responsibilities for the image classification model. What should you do?

Your organization leverages folders to represent different teams within your Google Cloud environment. To support Infrastructure as Code (IaC) practices, each team receives a dedicated service account upon onboarding. You want to ensure that teams have comprehensive permissions to manage resources within their assigned folders while adhering to the principle of least privilege. You must design the permissions for these team-based service accounts in the most effective way possible. What should you do?

You are exporting application logs to Cloud Storage. You encounter an error message that the log sinks don't support uniform bucket-level access policies. How should you resolve this error?

Your application is deployed as a highly available cross-region solution behind a global external HTTP(S) load balancer. You notice significant spikes in traffic from multiple IP addresses but it is unknown whether the IPs are malicious. You are concerned about your application's availability. You want to limit traffic from these clients over a specified time interval.

What should you do?
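Limiting clients over a specified time interval is the idea behind rate-based throttling. The sketch below shows a per-client fixed-window counter in Python as a rough illustration of the concept only. The `FixedWindowLimiter` class and its parameters are hypothetical, and the code does not represent how any Google Cloud product implements rate limiting.

```python
from collections import defaultdict


class FixedWindowLimiter:
    """Allow at most max_requests per client in each fixed time window."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # Maps client IP -> (window index, requests counted in that window).
        self._counters = defaultdict(lambda: (None, 0))

    def allow(self, client_ip, now):
        """Return True if the request at time `now` (seconds) is allowed."""
        window = int(now // self.window_seconds)
        current_window, count = self._counters[client_ip]
        if current_window != window:
            # A new window has started, so the counter resets.
            count = 0
        if count >= self.max_requests:
            self._counters[client_ip] = (window, count)
            return False
        self._counters[client_ip] = (window, count + 1)
        return True


limiter = FixedWindowLimiter(max_requests=3, window_seconds=60)
# Four requests in the first minute, then one in the next minute.
decisions = [limiter.allow("203.0.113.7", t) for t in (0, 1, 2, 3, 61)]
```

Here `decisions` is `[True, True, True, False, True]`: the fourth request exceeds the per-window threshold, and the counter resets when the next window begins. Other clients are counted independently.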

You are the project owner for a regulated workload that runs in a project you own and manage as an Identity and Access Management (IAM) admin. For an upcoming audit, you need to provide access reviews evidence. Which tool should you use?

All logs in your organization are aggregated into a centralized Google Cloud logging project for analysis and long-term retention. While most of the log data can be viewed by operations teams, there are specific sensitive fields (i.e., protoPayload.authenticationInfo.principalEmail) that contain identifiable information that should be restricted only to security teams. You need to implement a solution that allows different teams to view their respective application logs in the centralized logging project. It must also restrict access to specific sensitive fields within those logs to only a designated security group. Your solution must ensure that other fields in the same log entry remain visible to other authorized groups. What should you do?

An organization is moving applications to Google Cloud while maintaining a few mission-critical applications on-premises. The organization must transfer the data at a bandwidth of at least 50 Gbps. What should they use to ensure secure continued connectivity between sites?

Which Identity-Aware Proxy role should you grant to an Identity and Access Management (IAM) user to access HTTPS resources?

You are auditing all your Google Cloud resources in the production project. You want to identify all principals who can change firewall rules.

What should you do?

Which type of load balancer should you use to maintain client IP by default while using the standard network tier?

Your organization operates in a highly regulated industry and uses multiple Google Cloud services. You need to identify potential risks to regulatory compliance. Which situation introduces the greatest risk?

You need to set up two network segments: one with an untrusted subnet and the other with a trusted subnet. You want to configure a virtual appliance such as a next-generation firewall (NGFW) to inspect all traffic between the two network segments. How should you design the network to inspect the traffic?

A business unit at a multinational corporation signs up for GCP and starts moving workloads into GCP. The business unit creates a Cloud Identity domain with an organizational resource that has hundreds of projects.

Your team becomes aware of this and wants to take over managing permissions and auditing the domain resources.

Which type of access should your team grant to meet this requirement?

Your company is migrating a customer database that contains personally identifiable information (PII) to Google Cloud. To prevent accidental exposure, this data must be protected at rest. You need to ensure that all PII is automatically discovered and redacted, or pseudonymized, before any type of analysis. What should you do?

Your organization has an application hosted in Cloud Run. You must control access to the application by using Cloud Identity-Aware Proxy (IAP) with these requirements:

Only users from the AppDev group may have access.

Access must be restricted to internal network IP addresses.

What should you do?

As adoption of the Cloud Data Loss Prevention (DLP) API grows within the company, you need to optimize usage to reduce cost. DLP target data is stored in Cloud Storage and BigQuery. The location and region are identified as a suffix in the resource name.

Which cost reduction options should you recommend?

You are creating a new infrastructure CI/CD pipeline to deploy hundreds of ephemeral projects in your Google Cloud organization to enable your users to interact with Google Cloud. You want to restrict the use of the default networks in your organization while following Google-recommended best practices. What should you do?

A customer wants to make it convenient for their mobile workforce to access a CRM web interface that is hosted on Google Cloud Platform (GCP). The CRM can only be accessed by someone on the corporate network. The customer wants to make it available over the internet. Your team requires an authentication layer in front of the application that supports two-factor authentication.

Which GCP product should the customer implement to meet these requirements?

You are part of a security team investigating a compromised service account key. You need to audit which new resources were created by the service account.

What should you do?

Your organization must comply with the regulation to keep instance logging data within Europe. Your workloads will be hosted in the Netherlands in region europe-west4 in a new project. You must configure Cloud Logging to keep your data in the country.

What should you do?

Your organization operates in a highly regulated environment and has a stringent set of compliance requirements for protecting customer data. You must encrypt data while in use to meet regulations. What should you do?

Your organization previously stored files in Cloud Storage by using Google Managed Encryption Keys (GMEK) but has recently updated the internal policy to require Customer Managed Encryption Keys (CMEK). You need to re-encrypt the files quickly and efficiently with minimal cost.

What should you do?

A company has redundant mail servers in different Google Cloud Platform regions and wants to route customers to the nearest mail server based on location.

How should the company accomplish this?

You want to use the gcloud command-line tool to authenticate using a third-party single sign-on (SSO) SAML identity provider. Which options are necessary to ensure that authentication is supported by the third-party identity provider (IdP)? (Choose two.)

Your organization develops software involved in many open source projects and is concerned about software supply chain threats. You need to deliver provenance for the build to demonstrate the software is untampered.

What should you do?

A company migrated their entire data center to Google Cloud Platform. It is running thousands of instances across multiple projects managed by different departments. You want to have a historical record of what was running in Google Cloud Platform at any point in time.

What should you do?

Your organization is migrating its primary web application from on-premises to Google Kubernetes Engine (GKE). You must advise the development team on how to grant their applications access to Google Cloud services from within GKE according to security recommended practices. What should you do?

A company allows every employee to use Google Cloud Platform. Each department has a Google Group, with all department members as group members. If a department member creates a new project, all members of that department should automatically have read-only access to all new project resources. Members of any other department should not have access to the project. You need to configure this behavior.

What should you do to meet these requirements?

Your organization has had a few recent DDoS attacks. You need to authenticate responses to domain name lookups. Which Google Cloud service should you use?

Your organization is worried about recent news headlines regarding application vulnerabilities in production applications that have led to security breaches. You want to automatically scan your deployment pipeline for vulnerabilities and ensure only scanned and verified containers can run in the environment. What should you do?

An office manager at your small startup company is responsible for matching payments to invoices and creating billing alerts. For compliance reasons, the office manager is only permitted to have the Identity and Access Management (IAM) permissions necessary for these tasks. Which two IAM roles should the office manager have? (Choose two.)

You are a security administrator at your company. Per Google-recommended best practices, you implemented the domain restricted sharing organization policy to allow only required domains to access your projects. An engineering team is now reporting that users at an external partner outside your organization domain cannot be granted access to the resources in a project. How should you make an exception for your partner's domain while following the stated best practices?

A customer deployed an application on Compute Engine that takes advantage of the elastic nature of cloud computing.

How can you work with Infrastructure Operations Engineers to best ensure that Windows Compute Engine VMs are up to date with all the latest OS patches?

Your team wants to make sure Compute Engine instances running in your production project do not have public IP addresses. The frontend application Compute Engine instances will require public IPs. The product engineers have the Editor role to modify resources. Your team wants to enforce this requirement.

How should your team meet these requirements?

You work for a healthcare provider that is expanding into the cloud to store and process sensitive patient data. You must ensure the chosen Google Cloud configuration meets these strict regulatory requirements:

Data must reside within specific geographic regions.

Certain administrative actions on patient data require explicit approval from designated compliance officers.

Access to patient data must be auditable.

What should you do?

You work for a large organization that recently implemented a 100GB Cloud Interconnect connection between your Google Cloud and your on-premises edge router. While routinely checking the connectivity, you noticed that the connection is operational but there is an error message that indicates MACsec is operationally down. You need to resolve this error. What should you do?

Users are reporting an outage on your public-facing application that is hosted on Compute Engine. You suspect that a recent change to your firewall rules is responsible. You need to test whether your firewall rules are working properly. What should you do?

Your company has multiple teams needing access to specific datasets across various Google Cloud data services for different projects. You need to ensure that team members can only access the data relevant to their projects and prevent unauthorized access to sensitive information within BigQuery, Cloud Storage, and Cloud SQL. What should you do?

A customer needs to launch a 3-tier internal web application on Google Cloud Platform (GCP). The customer’s internal compliance requirements dictate that end-user access may only be allowed if the traffic seems to originate from a specific known good CIDR. The customer accepts the risk that their application will only have SYN flood DDoS protection. They want to use GCP’s native SYN flood protection.

Which product should be used to meet these requirements?
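The compliance requirement above comes down to testing whether a request's source address falls inside a known-good CIDR range. This is a small Python sketch of that check using the standard `ipaddress` module. The `source_allowed` helper and the example addresses (taken from documentation ranges) are assumptions for illustration and are not part of the original scenario.

```python
import ipaddress


def source_allowed(source_ip, allowed_cidr):
    """Return True if source_ip falls inside the allowed CIDR range."""
    return ipaddress.ip_address(source_ip) in ipaddress.ip_network(allowed_cidr)


# Hypothetical known-good range and two sample source addresses.
inside = source_allowed("203.0.113.25", "203.0.113.0/24")
outside = source_allowed("198.51.100.4", "203.0.113.0/24")
```

In this example `inside` is `True` and `outside` is `False`. A firewall rule with a source range applies the same kind of membership test at the network layer.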

You are a security engineer at a finance company. Your organization plans to store data on Google Cloud, but your leadership team is worried about the security of their highly sensitive data. Specifically, your company is concerned about internal Google employees' ability to access your company's data on Google Cloud. What solution should you propose?

A customer terminates an engineer and needs to make sure the engineer's Google account is automatically deprovisioned.

What should the customer do?

Your organization operates Virtual Machines (VMs) with only private IPs in the Virtual Private Cloud (VPC) with internet access through Cloud NAT. Every day, you must patch all VMs with critical OS updates and provide summary reports.

What should you do?

You are deploying regulated workloads on Google Cloud. The regulation has data residency and data access requirements. It also requires that support is provided from the same geographical location as where the data resides.

What should you do?

A customer has 300 engineers. The company wants to grant different levels of access and efficiently manage IAM permissions between users in the development and production environment projects.

Which two steps should the company take to meet these requirements? (Choose two.)

In an effort for your company messaging app to comply with FIPS 140-2, a decision was made to use GCP compute and network services. The messaging app architecture includes a Managed Instance Group (MIG) that controls a cluster of Compute Engine instances. The instances use Local SSDs for data caching and UDP for instance-to-instance communications. The app development team is willing to make any changes necessary to comply with the standard.

Which options should you recommend to meet the requirements?

You are a Cloud Identity administrator for your organization. In your Google Cloud environment, groups are used to manage user permissions. Each application team has a dedicated group. Your team is responsible for creating these groups, and the application teams can manage the team members on their own through the Google Cloud console. You must ensure that the application teams can only add users from within your organization to their groups.

What should you do?

Your organization acquired a new workload. The Web and Application (App) servers will be running on Compute Engine in a newly created custom VPC. You are responsible for configuring a secure network communication solution that meets the following requirements:

Only allows communication between the Web and App tiers.

Enforces consistent network security when autoscaling the Web and App tiers.

Prevents Compute Engine Instance Admins from altering network traffic.

What should you do?

A customer is running an analytics workload on Google Cloud Platform (GCP) where Compute Engine instances are accessing data stored on Cloud Storage. Your team wants to make sure that this workload will not be able to access, or be accessed from, the internet.

Which two strategies should your team use to meet these requirements? (Choose two.)