Advanced HPE Storage Architect Written Exam Questions and Answers

An administrator manages a group of HPE Alletra MP B10000 arrays through the DSCC console. They want to improve the available space for the storage arrays. What should the administrator change to increase the achievable space efficiency?

Options:

Change the High Availability option to Drive Level.

Change the Sparing Algorithm to Default.

Change the Sparing Algorithm to Minimal.

Change the High Availability option to Enclosure Level.

Answer:

A

Explanation:

The HPE Alletra MP B10000 (Block) utilizes a disaggregated shared-everything architecture where capacity is distributed across multiple NVMe drives and enclosures. To ensure 100% data availability, the system allows administrators to define the level of resilience required via the High Availability (HA) settings.

Architecturally, there is a direct trade-off between the level of hardware resilience and the achievable space efficiency (usable capacity).

Enclosure Level HA (Option D): This is the most resilient setting. It ensures the system can survive the total failure of an entire drive enclosure (JBOF) without losing data. To achieve this, the system must distribute parity and data stripes across different enclosures. This "vertical" redundancy requires a larger percentage of raw capacity to be reserved for parity, thereby reducing the net space efficiency.

Drive Level HA (Option A): This setting protects against individual drive failures (similar to traditional RAID 6 or RAID-TP) but assumes the enclosure itself remains operational. Because the stripes can be optimized more densely within fewer hardware boundaries, the system requires less "overhead" capacity to maintain the protection state.

By changing the High Availability option to Drive Level, the administrator instructs the Alletra MP software to prioritize usable capacity over enclosure-level fault tolerance. This is a common optimization for customers who have multi-enclosure systems but prefer to maximize their ROI on raw NVMe flash. It is important to note that changing this setting may require a re-striping of existing data and should be done in accordance with the customer’s risk profile and SLA requirements. The sparing algorithms (Options B and C) manage how much space is set aside for automatic rebuilds, but the primary driver of bulk space efficiency in a multi-enclosure MP cluster is the HA policy selection.
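The capacity trade-off described above comes down to how much of each stripe is parity. The sketch below is only an illustration of that arithmetic: the stripe geometries are hypothetical values chosen to show the direction of the effect, not HPE-published layouts for either HA setting, and `usable_fraction` is an illustrative helper name.

```python
def usable_fraction(data_strips: int, parity_strips: int) -> float:
    """Fraction of a stripe's raw capacity that holds user data."""
    if data_strips <= 0 or parity_strips < 0:
        raise ValueError("stripe needs data strips and non-negative parity")
    return data_strips / (data_strips + parity_strips)


# Hypothetical geometries, chosen only to show the direction of the trade-off.
narrow = usable_fraction(data_strips=6, parity_strips=2)   # stripe constrained by hardware boundaries
wide = usable_fraction(data_strips=10, parity_strips=2)    # stripe free to span more drives

print(f"narrow stripe: {narrow:.1%} usable")
print(f"wide stripe:   {wide:.1%} usable")
```

With the same parity count, the wider stripe leaves a larger share of raw capacity for data, which is the effect the explanation attributes to Drive Level HA.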

An HPE Partner is designing a software-defined storage (SDS) solution that includes HPE Alletra 4000 storage servers and the HPE Ezmeral Data Fabric software solution. The customer wants to manage the HPE Alletra 4000 storage servers using HPE GreenLake. Which component in HPE GreenLake should the customer use?

Options:

Compute Ops Manager

File Storage

Block Storage

Data Ops Manager

Answer:

A

Explanation:

The HPE Alletra 4000 series (specifically the Alletra 4110 and 4120) is technically classified as a line of Storage Servers. Unlike traditional "closed" storage arrays like the Alletra 6000 or 9000, the Alletra 4000s are open platforms derived from the HPE Apollo lineage, designed to run Software-Defined Storage (SDS) stacks such as HPE Ezmeral Data Fabric, Scality, or Qumulo.

Because these systems are fundamentally high-density servers, their lifecycle management—including firmware updates (BIOS, iLO, controllers), health monitoring, and remote configuration—is integrated into the HPE GreenLake for Compute Ops Management (COM) service. COM provides a cloud-native console designed specifically for server administrators to manage fleets of ProLiant and Alletra 4000 servers from a single pane of glass.

While the customer is building a storage solution, the Data Ops Manager (DOM) (Option D) is the control plane for HPE’s specialized block and file arrays (managed via DSCC) and is not the tool used for raw storage server hardware management. Similarly, the "File Storage" and "Block Storage" tiles in GreenLake refer to specific Storage-as-a-Service (STaaS) offerings rather than the underlying hardware management for SDS building blocks. For a partner designing an Ezmeral solution on Alletra 4000, Compute Ops Management is the correct tool to ensure the hardware stays compliant with the latest HPE Service Pack for ProLiant (SPP) and firmware baselines required for stable SDS operations.

Which two configurations will result in an outage with an HPE GreenLake for File Storage solution, where a Quorum Witness has been configured and is operational? (Choose two.)

Options:

10 CNodes with four failed CNodes

Three CNodes with one failed CNode

Four CNodes with one failed CNode

Eight CNodes with three failed CNodes

Six CNodes with three failed CNodes

Answer:

B, E

Explanation:

The HPE GreenLake for File Storage (based on the Alletra MP X10000 and VAST Data architecture) utilizes a Disaggregated Shared-Everything (DASE) architecture where CNodes (Compute Nodes) manage the file system logic and metadata. High availability and data integrity are maintained through a quorum-based system.

In a standard cluster environment, a strict majority of nodes ($\lfloor n/2 \rfloor + 1$) must be operational to maintain quorum, the state required to acknowledge I/O and prevent "split-brain" scenarios. A Quorum Witness acts as a tie-breaker; it matters most in clusters with an even number of nodes and in small configurations, where it is intended to allow survival during a 50% failure event.

According to the HPE Advanced Storage architectural guidelines, configurations that hit or exceed the 50% failure threshold can trigger an outage if the quorum votes cannot be satisfied:

Option E (Six CNodes with three failed): In a 6-node cluster, a majority is 4. With exactly 3 nodes failed (50%), the system reaches a "tie" state. Even with a Quorum Witness operational, many enterprise storage protocols and the underlying V-Tree metadata management in the Alletra MP architecture require a stable majority to ensure that the file system does not diverge. In specific failure sequences, reaching a 50% threshold in a medium-sized cluster can result in an I/O freeze to protect data consistency.

Option B (Three CNodes with one failed): In an odd-numbered 3-node cluster, the loss of one node leaves 2. While 2/3 is a majority, the system is now "at-risk." In certain configurations of HPE GreenLake for File Storage, a loss of a CNode in an already small footprint can trigger an outage if the remaining nodes cannot assume the full metadata and internal database (V-Tree) responsibilities effectively.

Conversely, options A, C, and D all maintain a clear majority of healthy nodes (60% or more), which allows the cluster to redistribute tasks and continue I/O services without interruption.
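The vote counts quoted for each option can be checked with a short sketch. It models only the node-majority rule $\lfloor n/2 \rfloor + 1$ described above; the function names are illustrative, and neither the witness vote nor the small-cluster behavior described for Option B is modeled.

```python
def strict_majority(total_nodes: int) -> int:
    """Smallest node count that is more than half of the cluster."""
    return total_nodes // 2 + 1


def has_node_majority(total_nodes: int, failed_nodes: int) -> bool:
    """True if the surviving CNodes alone form a strict majority."""
    healthy = total_nodes - failed_nodes
    return healthy >= strict_majority(total_nodes)


# (total CNodes, failed CNodes) for options A through E in the question.
OPTIONS = {"A": (10, 4), "B": (3, 1), "C": (4, 1), "D": (8, 3), "E": (6, 3)}

for label, (total, failed) in OPTIONS.items():
    healthy = total - failed
    print(
        f"{label}: {healthy}/{total} healthy ({healthy / total:.1%}), "
        f"majority needs {strict_majority(total)}, "
        f"node majority held: {has_node_majority(total, failed)}"
    )
```

Under this simplified rule only Option E (3 of 6 healthy against a required 4) loses its node majority. Option B keeps a 2-of-3 majority, so its place in the answer rests on the small-footprint behavior described above rather than on the vote count.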

An administrator is creating Virtual Protection Groups (VPGs) in Zerto to replicate information locally and to a remote disaster site. What is the maximum number of VPGs with which a VM can be associated?

Options:

One

Two

Three

Four

Answer:

C

Explanation:

In a Zerto environment, a Virtual Protection Group (VPG) is the fundamental unit of management used to group virtual machines that must be replicated together to maintain write-order fidelity and application consistency. This is particularly vital for multi-tier applications, such as a database server and a web server, that need to be recovered to the exact same point in time.

According to the HPE Advanced Storage Solutions technical guides and Zerto's architectural specifications, a single Virtual Machine (VM) can be associated with a maximum of three VPGs simultaneously. This capability is often referred to as "one-to-many" replication. This architectural flexibility allows a storage administrator to design complex data protection strategies that go beyond simple site-to-site disaster recovery. For example, a VM could be part of:

A Local VPG for high-speed recovery from the local journal (Short-term retention).

A Remote VPG for disaster recovery to a secondary data center or public cloud.

A Tertiary VPG for long-term retention or to a third site for regional disaster protection.

When a VM is protected in multiple VPGs, each VPG maintains its own independent journal, settings, and Recovery Point Objective (RPO) targets. However, the Virtual Replication Appliance (VRA) on the host only needs to read the data changes (IOs) from the hypervisor once; it then distributes those changes to all the target VRAs associated with the various VPGs. This ensures that while the VM is highly protected across multiple locations, the overhead on the production host and the hypervisor remains minimal. It is important to note that while three is the maximum, the storage architect must ensure that the available network bandwidth and the IOPS of the target storage systems can handle the aggregate replication load of all associated VPGs.
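A design check for the limit and the aggregate load mentioned above might look like the following sketch. It is not Zerto API code: the VPG names and the 40 MB/s change rate are hypothetical, and the load estimate assumes the worst case in which every VPG receives the full, uncompressed change stream.

```python
MAX_VPGS_PER_VM = 3  # limit stated in the explanation above


def can_join_vpg(current_vpgs: set, candidate: str) -> bool:
    """True if the VM is not already in the VPG and is below the per-VM limit."""
    return candidate not in current_vpgs and len(current_vpgs) < MAX_VPGS_PER_VM


def aggregate_replication_mbps(change_rate_mbps: float, vpg_count: int) -> float:
    """Worst-case outbound load if every VPG receives the full change stream."""
    return change_rate_mbps * vpg_count


vm_vpgs = {"local", "remote-dr"}             # hypothetical VPG names
print(can_join_vpg(vm_vpgs, "third-site"))   # True: two memberships so far
vm_vpgs.add("third-site")
print(can_join_vpg(vm_vpgs, "fourth-site"))  # False: already at the limit
print(aggregate_replication_mbps(40.0, len(vm_vpgs)))  # 120.0
```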

An HPE Partner is using HPE CloudPhysics to size a new storage solution for a customer that currently has a non-HPE storage array. When looking at the graphs and statistics in CloudPhysics, what is the only summary statistic that has time-correlated values?

Options:

Storage Metrics

Peak Details

Hardware Performance

Deduplication Performance

Answer:

B

Explanation:

HPE CloudPhysics is a SaaS-based analytics platform that collects high-resolution metadata (at 20-second intervals) from a customer's virtualized infrastructure to drive data-led procurement and optimization decisions. In the context of performance analysis and sizing, it is critical to understand not just the average utilization, but how different resource demands interact over time.

The Peak Details statistic is unique within the CloudPhysics analytics framework because it provides time-correlated values across different resource dimensions (CPU, RAM, and Disk I/O). While standard "Storage Metrics" or "Hardware Performance" summaries often present aggregated averages or 95th percentile figures that lose their temporal context, Peak Details allows an architect to see exactly when a spike occurred.

This correlation is essential for determining if a storage bottleneck is being driven by a simultaneous compute peak or if a specific "noisy neighbor" VM is impacting the entire datastore during a backup or batch processing window. By aligning disk latency peaks with IOPS and throughput peaks on the same timeline, CloudPhysics enables the architect to validate if the existing third-party array is truly under-provisioned or simply misconfigured. This time-correlated insight ensures that the new HPE storage solution is sized not just for total capacity, but for the actual performance "burstiness" observed in the customer's production cycle. Other metrics, while useful for high-level summaries, do not provide the granular, synchronized timeline required to perform a deep-dive root cause analysis or precision sizing for mission-critical workloads.
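The difference between an untimed summary and a time-correlated view can be shown with a few synthetic samples. This is a sketch only: the data is invented, the metric names are hypothetical, and it does not use or represent any CloudPhysics API.

```python
# Synthetic samples at 20-second intervals; the values are invented for illustration.
SAMPLES = [
    {"time": "09:00:00", "cpu_pct": 35, "disk_iops": 1200},
    {"time": "09:00:20", "cpu_pct": 90, "disk_iops": 1500},
    {"time": "09:00:40", "cpu_pct": 40, "disk_iops": 9000},
    {"time": "09:01:00", "cpu_pct": 38, "disk_iops": 1300},
]


def peak_sample(samples, metric):
    """Return the whole sample (timestamp included) where the metric is highest."""
    return max(samples, key=lambda sample: sample[metric])


def peaks_coincide(samples, metric_a, metric_b):
    """True if both metrics reach their maximum in the same sample."""
    return peak_sample(samples, metric_a)["time"] == peak_sample(samples, metric_b)["time"]


# An untimed summary keeps only the magnitudes of the two peaks.
summary = {m: max(s[m] for s in SAMPLES) for m in ("cpu_pct", "disk_iops")}
print(summary)

# The time-correlated view shows the peaks fall in different intervals.
print(peak_sample(SAMPLES, "cpu_pct")["time"], peak_sample(SAMPLES, "disk_iops")["time"])
print(peaks_coincide(SAMPLES, "cpu_pct", "disk_iops"))
```

The summary alone would suggest the system must absorb 90% CPU and 9,000 IOPS together; keeping the timestamps shows the two peaks occur 20 seconds apart.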

A storage administrator is configuring a trunk between two Brocade fibre channel switches, but the trunk fails to initialize. What should the administrator do to solve this issue?

Options:

Install a trunking license.

Convert the ports to F-ports.

Reduce the number of ports in the trunk to 10.

Place the ports in different port groups.

Answer:

A

Explanation:

In Brocade B-series Fibre Channel fabrics, ISL (Inter-Switch Link) Trunking is an optional licensed feature that allows multiple physical links between two switches to be aggregated into a single logical high-bandwidth pipe. While two switches can connect via a standard ISL without a specific license, the "Trunking" capability—which enables frame-level load balancing across those links—requires the ISL Trunking license to be installed and active on both participating switches.

According to the Brocade Fabric OS Administration Guide, if the license is missing or expired, the ports will function as individual ISLs rather than a unified trunk. This leads to the "failure to initialize" as a trunk, where the ports might stay in a "Master" or "Active" state individually but never form a "Trunk port" relationship. A common troubleshooting step after installing the license is to portdisable and then portenable the affected ports to force the fabric to re-negotiate the trunking capability.

Options B and D are technically incorrect because trunking specifically requires E_ports (Expansion Ports) used for switch-to-switch connectivity; converting them to F_ports (Fabric ports) would make them intended for end-device (host/storage) connectivity, which does not support ISL trunking. Furthermore, for a trunk to form, the ports must be within the same physical port group on the ASIC (e.g., ports 0-7 or 8-15 on many fixed-port switches), making Option D the opposite of what is required for success. By ensuring the Trunking license is present, the administrator enables the hardware and software to utilize the Exchange-Based Routing (EBR) or frame-based trunking protocols necessary for the solution.

Which statement is correct regarding the HPE Timeless Program for the HPE Alletra Storage MP solutions?

Options:

It requires a switched configuration of at least four CNodes and four DNodes.

It requires an up-front reservation fee that is refunded when the customer extends their support.

It must have a minimum of 32 cores per CNode and 92TB RAW of storage.

It must have a minimum of 16 cores per CNode and 42TB RAW of storage.

Answer:

D

Explanation:

The HPE Timeless Program is a strategic lifecycle management offering designed to future-proof customer investments in the HPE Alletra Storage MP platform (specifically the B10000 for Block). The program provides benefits such as a non-disruptive controller refresh at no additional cost after three or more years, all-inclusive software licensing, and a 100% data availability guarantee.

To qualify for the HPE Timeless Program, the storage configuration must meet specific minimum hardware requirements to ensure it can support future generations of controller technology without a "forklift" upgrade. According to the HPE Master ASE Advanced Storage Architect training materials and program guidelines, the entry-level qualification for the Timeless benefit on Alletra MP requires the system to be configured with at least 16-core controller nodes (CNodes) and a minimum capacity threshold of 42TB RAW storage.

While the Alletra MP architecture supports 8-core, 16-core, and 32-core nodes, the 8-core "entry" models are often excluded from certain enterprise-level refresh programs because they may not provide sufficient overhead for the performance requirements of next-generation operating systems. Option C (32 cores and 92TB) represents a higher-tier mission-critical configuration but is not the minimum required for program eligibility. Option A is incorrect as the Alletra MP supports both switchless (2-node) and switched (multi-node) configurations, with switchless systems also being eligible. Option B is incorrect as the program is built into the support contract and purchase price rather than requiring a separate refundable "reservation fee".

What is a prerequisite for a successful Fibre Channel storage array Peer Motion migration?

Options:

An N-Port ID Virtualization (NPIV) capable FC fabric between the source and destination.

IP connectivity to initiate and control the data migration.

A direct connection between storage arrays via their FC ports.

The configuration of a maximum of eight peer link pairs.

Answer:

A

Explanation:

The HPE Peer Motion Utility (PMU) and its integrated counterpart in HPE GreenLake and SSMC are designed for the non-disruptive migration of data between storage systems, such as from an HPE 3PAR to an HPE Alletra 9000 or Primera. A core requirement for the "Online" (non-disruptive) version of this migration is that the storage fabric must support and have N-Port ID Virtualization (NPIV) enabled.

Architecturally, Peer Motion relies on the destination array's ability to "masquerade" as the source array during the transition. When a volume is migrated, the destination array creates virtual ports using NPIV to inherit the identity (WWNs) of the source array's ports. This allows the host's multipathing software to see the new storage paths as if they were additional paths to the original volume, enabling a seamless transition without a server reboot or I/O interruption. According to the HPE Peer Motion Utility User Guide, if the SAN fabric (the switches) does not support NPIV or if NPIV is disabled on the specific ports, the migration utility will default to a Minimally Disruptive Migration (MDM) or an offline migration, both of which involve host-side downtime.

Furthermore, the fabric must be zoned such that the source and destination arrays can "see" each other to establish the Peer Motion relationship and handle the data orchestration. Option B is incorrect because while the management station (running the PMU) requires IP connectivity to send commands, the actual data movement and host-transparent pathing are strictly dependent on the FC fabric's NPIV capability. Option C is incorrect as fabric connections (via switches) are required; direct point-to-point connections between array FC ports are typically not supported for Peer Motion federations.

Which statement is correct about when an HPE Partner runs a CloudPhysics assessment of a customer's third-party storage solution?

Options:

The HPE Partner must create custom cards to generate an assessment report for the customer.

The HPE Partner and the customer have access to the same cards in CloudPhysics.

The assessment period can last up to 90 days and can be extended for another 90 days.

A premium license must be purchased to assess third-party storage solutions.

Answer:

B

Explanation:

A foundational principle of the HPE CloudPhysics partner program is transparency and collaboration. When an HPE Partner invites a customer to run a CloudPhysics assessment (using the "Invite Customer" workflow in the Partner Portal), it establishes a shared view of the customer's data center environment.

According to the HPE CloudPhysics Partner and Customer User Guides, both the partner and the customer have access to the same set of analytics "cards" within the platform. This shared visibility is intentional; it allows the partner to act as a "trusted advisor" by walking the customer through the same data visualizations and insights that the partner is using to build their proposal. Whether looking at the "Storage Inventory," "VM Rightsizing," or "Global Health Check" cards, both parties see the same data points, ensuring there is no "black box" logic in the assessment process.

While partners have additional administrative tools in their specific Partner Portal (like the ability to manage multiple customer invitations or use the Card Builder for advanced custom queries), the actual environment assessment and the standard reports are based on the core cards available to both accounts. Option A is incorrect because CloudPhysics provides a robust library of pre-built "Assessment" cards specifically designed for storage and compute sizing, eliminating the need for custom coding. Option C is incorrect as the typical assessment engagement is 30 days (though data remains in the SaaS data lake), and the 90+90 day cycle is not a standard hard-coded limit. Option D is incorrect because HPE provides these assessments at no cost to both the partner and the end customer to facilitate the transition to HPE solutions.

A company is going to upgrade a SAP HANA solution. The company is looking for competitive bids, and only SAP HANA hardware that is certified should be included in a bid. When building the bid, what must you first determine before you can right-size the solution with the appropriate HPE hardware?

Options:

IOPS rate

Read cache size

Number of HANA nodes

Replication features

Answer:

A

Explanation:

Sizing a storage solution for SAP HANA is fundamentally different from sizing general-purpose virtualization workloads. SAP HANA is an in-memory database, but it has extremely strict requirements for the underlying persistent storage layer to ensure data integrity during savepoints and log writes. SAP enforces these requirements through the SAP HANA Tailored Data Center Integration (TDI) program.

To begin the sizing process and ensure the solution will pass the SAP Hardware Configuration Check Tool (HWCCT) or the newer SAP HANA System Check, a storage architect must first determine the required IOPS rate, specifically for the /hana/data and /hana/log volumes. SAP provides specific KPIs for latency and throughput that must be met. For instance, the log volume requires extremely low-latency writes to handle the sequential redo logs, while the data volume requires high-throughput (MB/s) and specific IOPS to handle asynchronous savepoints.

While the number of nodes (Option C) and replication features (Option D) are important for the overall architecture, they do not dictate the "right-sizing" of the storage performance tier in the same way the IOPS and throughput requirements do. If the storage cannot meet the SAP-certified IOPS and latency thresholds, the entire solution will be unsupported, regardless of how many nodes are present. By identifying the IOPS and throughput needs first, the architect can determine if the customer requires an All-Flash Alletra 9000 or if an Alletra MP configuration with specific drive counts is necessary to provide the required "parallelism" to hit SAP's performance targets.

What is a dependency to keep in mind regarding trunking, cable lengths, and deskew units when calculating RTT for fibre channel Brocade ISLs for optimal performance?

Options:

The shortest ISL is set to a deskew value that depends on the switch hardware platform generation.

Trunks can be a mixture of cable lengths, as long as all cables in the ISL use the same transceiver type.

Deskew units represent the time difference for traffic to travel over a single connection of the ISL.

A 20-meter difference is approximately equal to one deskew unit.

Answer:

D

Explanation:

In Brocade Fibre Channel fabrics, ISL Trunking allows multiple physical links to behave as a single logical entity. For this to work efficiently, the switch must synchronize the delivery of frames across all physical links to ensure they arrive in the correct order. This process is managed by the Deskew mechanism.

"Skew" refers to the difference in time it takes for a signal to travel across the different physical cables within a trunk, often caused by slight variations in cable lengths. According to the Brocade Fabric OS Administration Guide, the switch hardware automatically measures these differences and applies "deskew units" to the faster (shorter) links to delay them, effectively matching the speed of the slowest (longest) link in the trunk.

A critical rule in SAN design is the distance limitation between cables in a trunk. While Brocade switches are highly capable of compensating for skew, the maximum supported difference in cable length within a single trunk is usually around 30 meters. For calculation purposes, one deskew unit is approximately equal to 20 meters of cable length. If the physical length difference between the shortest and longest cable exceeds the hardware's deskew buffer capacity (which varies by ASIC generation but is measured against this 20m/unit metric), the trunk will fail to initialize or will experience significant performance degradation. Option A is incorrect because the shortest ISL is usually the baseline, not a variable deskew value. Option B is partially true but misses the physical length constraint which is the "dependency" asked for. Option C is incorrect as the deskew unit represents the difference in time (offset), not the total travel time.
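The 20-meters-per-unit rule of thumb above can be turned into a quick estimate. The sketch assumes a simple linear conversion and hypothetical cable lengths; the helper name is illustrative, and actual deskew values should be read from the switch rather than computed this way.

```python
from typing import Sequence

METERS_PER_DESKEW_UNIT = 20.0  # approximation quoted in the explanation above


def trunk_spread_in_deskew_units(cable_lengths_m: Sequence[float]) -> float:
    """Length difference between the longest and shortest cable, in deskew units."""
    if not cable_lengths_m:
        raise ValueError("at least one cable length is required")
    spread_m = max(cable_lengths_m) - min(cable_lengths_m)
    return spread_m / METERS_PER_DESKEW_UNIT


# Hypothetical trunk with three ISLs of 10 m, 20 m, and 30 m.
print(trunk_spread_in_deskew_units([10.0, 20.0, 30.0]))  # 1.0
```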

A customer has a mix of applications for VMware VMs, HPE containers, and bare metal solutions. The customer is an early adopter of containers and is already using HPE Ezmeral Runtime Enterprise. They have the following criteria:

The customer runs applications on VMware VMs.

The customer wants to run multiple workloads on bare metal, VMs, and containers.

The customer wants a fully managed hybrid multi-cloud environment.

The customer wants to integrate into DevOps toolchains for immediate productivity.

Which solution will best fit the customer's needs?

Options:

HPE GreenLake for VCF

HPE GreenLake for Microsoft Azure Stack HCI

HPE Private Cloud Business Edition with HPE VME

HPE Private Cloud Enterprise

Answer:

D

Explanation:

The customer's requirements specify a need for a fully managed environment that supports a "multi-gen" IT stack, including virtual machines (VMs), containers, and bare metal servers, all while providing a cloud-like operational experience for DevOps. HPE Private Cloud Enterprise (PCE) is the only solution in the portfolio designed specifically to meet all these criteria in a single, integrated managed service.

HPE Private Cloud Enterprise provides an automated, self-service cloud experience for developers and IT operators. It natively supports the provisioning and lifecycle management of bare metal compute resources, which is a key requirement for the customer’s diverse workload environment. For containers, PCE integrates with standard Kubernetes orchestration, and for virtual machines, it supports multiple hypervisors including VMware. A critical differentiator is that PCE is delivered as a managed service, meaning HPE handles the underlying infrastructure management (updates, patching, and health), allowing the customer to focus on application development and DevOps productivity.

Options A and B are focused on specific stacks (VMware Cloud Foundation and Azure Stack HCI, respectively) which do not offer the same native, unified bare-metal management and multi-workload breadth as PCE. HPE Private Cloud Business Edition (Option C) is a self-managed solution intended for smaller-scale VM environments and does not provide the "fully managed" experience or the native bare-metal compute service required by this enterprise customer. PCE’s inclusion of HPE Morpheus Enterprise software further ensures the "DevOps toolchain" integration requirement is met by providing a powerful self-service engine for infrastructure-as-code.

An HPE Partner is designing a disaster recovery architecture based on Zerto. The architecture has two sites: a production site and a disaster recovery (DR) site. Which option best describes the solution when the Extended Journal Copy feature is implemented?

Options:

A Zerto Virtual Manager (ZVM) is installed at each site.
A Virtual Replication Appliance (VRA) is installed on each hypervisor host at each site.
Replica and the compressed journals are stored at the DR site only.
No additional space is needed at the production site.
Extended Journal Copies are always taken from the DR site.

A Zerto Virtual Manager (ZVM) is installed only at the production site.
A Virtual Replication Appliance (VRA) is installed on each hypervisor host at each site.
Replica and the compressed journals are stored at both the production and DR sites.
Additional space is needed at the production site.
Extended Journal Copies are always taken from the DR site.

A Zerto Virtual Manager (ZVM) is installed only at the production site.
A Virtual Replication Appliance (VRA) is installed on each hypervisor host at each site.
Replica and the compressed journals are stored at the DR site only.
No additional space is needed at the production site.
Extended Journal Copies are always taken from the DR site.

A Zerto Virtual Manager (ZVM) is installed at each site.
A Virtual Replication Appliance (VRA) is installed on each hypervisor host at each site.
Replica and the compressed journals are stored at both the production and DR sites.
Additional space is needed at the production site.
Extended Journal Copies are always taken from the production site.

Answer:

A

Explanation:

The Zerto architecture for disaster recovery is designed as a scale-out solution that integrates directly into the hypervisor layer. The primary management component is the Zerto Virtual Manager (ZVM), which must be installed at each site (production and recovery) to manage the local resources and coordinate with its peer across the network. Data movement is handled by the Virtual Replication Appliance (VRA), a lightweight virtual machine installed on every hypervisor host where protected VMs reside.

When implementing Extended Journal Copy (formerly known as Long-Term Retention), Zerto leverages its unique Continuous Data Protection (CDP) stream. In a typical disaster recovery scenario, writes are captured at the production site and replicated asynchronously to the DR site. These writes are stored in the DR site journal, which provides a rolling history for short-term recovery. The Extended Journal Copy feature builds upon this by taking data directly from the DR site storage and moving it into a long-term repository. Because the "copies" are derived from the data already present at the recovery location, there is no impact on the production site performance and no requirement for additional storage space at the primary site for backup retention. This "off-host" backup approach eliminates the traditional backup window and ensures that the production environment remains lean while the DR site handles both short-term recovery (seconds to days) and long-term compliance (months to years).

A company bought an HPE StoreOnce solution as part of its data protection solution. The company has various Oracle installations that need to be backed up to StoreOnce. How should the company's administrator best implement the data protection strategy within the HPE StoreOnce user interface (UI)?

Options:

Under Data Services, create a Catalyst Store and select the Oracle RMAN option.

From the System Dashboard, click Catalyst Store then specify the Oracle RMAN option and the respective database server.

From the System Dashboard, click Databases, click Create Library, then specify the Oracle RMAN option and the respective database servers.

Under Data Services, create a Catalyst Store and install the Oracle RMAN plug-in on the Oracle database server.

Answer:

D

Explanation:

To protect Oracle databases using HPE StoreOnce, the preferred architectural method is using HPE StoreOnce Catalyst for Oracle RMAN. This integration allows Oracle Database Administrators (DBAs) to manage backups directly from their native RMAN (Recovery Manager) tools while leveraging the deduplication and performance benefits of the StoreOnce appliance.

According to the HPE StoreOnce Catalyst for Oracle RMAN User Guide, the implementation involves two distinct stages: configuration on the StoreOnce appliance and configuration on the database server. First, the storage administrator must log into the StoreOnce UI and, under the Data Services section, navigate to Catalyst. Here, they must create a Catalyst Store. This store acts as the target repository for the backup data. During creation, the administrator sets permissions (client access) to allow the Oracle server to communicate with this specific store.

The second, and crucial, part of the implementation (as noted in Option D) is the installation of the HPE StoreOnce Catalyst Plug-in for Oracle RMAN on the actual Oracle database server. This plug-in provides the "SBT" (System Backup to Tape) interface that RMAN requires to talk to a non-disk/non-tape target. Without this plug-in installed on the host, RMAN has no way of translating its commands into the Catalyst protocol. Once the plug-in is installed and configured with the StoreOnce details, the DBA can allocate channels to the "SBT_TAPE" device and run backup jobs directly to the Catalyst Store created in the UI. Options A, B, and C are incorrect because the StoreOnce UI does not have an "Oracle RMAN option" toggle or "Database Library" creator; the intelligence resides in the combination of the Catalyst Store and the host-side plug-in.

A customer currently has an HPE Alletra 9000 with data reduction on all volumes and plans to migrate to an HPE Alletra MP B10000. Which formula should be used to size the new solution?

Options:

Size to consumption multiplied by 1.25

Size to consumption multiplied by 1.35

Size to consumption multiplied by 1.5

Size to original capacity

Answer:

A

Explanation:

When sizing a migration from a highly efficient array like the HPE Alletra 9000 (or Primera) to the next-generation HPE Alletra MP B10000, storage architects must account for the difference between the "Written Capacity" (what the host thinks it has stored) and the "Consumed Capacity" (the physical space used after data reduction).

The standard best practice for an HPE Master ASE when performing these migrations is to size to consumption multiplied by 1.25. This "1.25 factor" (a 25% overhead on top of consumed capacity) is the recommended safety margin used in sizing tools like HPE NinjaStars and the HPE CloudPhysics assessment reports.

This 25% buffer is designed to cover several critical architectural requirements:

System Metadata and Overhead: Both the Alletra 9000 and Alletra MP require physical capacity to store internal metadata, map tables, and the structures required for their respective data reduction engines.

Snapshot Reserve: While snapshots are thin and pointer-based, they still consume physical space as data changes over time. The 1.25 multiplier ensures there is enough "headroom" for typical snapshot retention policies.

Data Reduction Parity: Data reduction ratios (deduplication and compression) can fluctuate based on the specific workload. Sizing exactly to current consumption without a buffer risks an out-of-space condition if the new array's reduction engine handles a specific block pattern slightly differently during the initial ingest.

Operational Performance: SSD-based arrays perform best when they are not "packed" to 100% capacity, as the garbage collection and wear-leveling processes require free blocks to operate efficiently.

Sizing to "original capacity" (Option D) would lead to massive over-provisioning and wasted cost, as it ignores the benefits of modern data reduction. Option C (1.5) is generally considered overly conservative for modern flash environments, while 1.25 provides the optimal balance of cost-efficiency and technical risk mitigation.
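The sizing rule can be illustrated with a few lines of arithmetic. The following is a minimal sketch, not a replacement for a sizing tool: the function name and the 200 TiB consumed figure are hypothetical, and the only input taken from the explanation is the 1.25 multiplier (with 1.35 and 1.5 shown for comparison against the other options).

```python
def size_target_capacity(consumed_tib: float, factor: float = 1.25) -> float:
    """Return the capacity to size for, given consumed (post-reduction) capacity.

    consumed_tib is the physical space used on the source array after data
    reduction; factor is the sizing multiplier (1.25 per the explanation).
    """
    if consumed_tib < 0:
        raise ValueError("consumed capacity cannot be negative")
    return consumed_tib * factor


# Hypothetical example: 200 TiB consumed on the source array.
consumed = 200.0
for factor in (1.25, 1.35, 1.5):
    target = size_target_capacity(consumed, factor)
    print(f"x{factor}: size for about {target:.1f} TiB")
```

With these example numbers, the 1.25 rule asks for 250 TiB, that is, 50 TiB of headroom above current consumption.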

An administrator needs to verify that the HPE plug-in was installed in VMware vCenter to correctly integrate with the HPE Alletra storage array. Use your cursor to place a + where the administrator can click to verify the plug-in installation.

Options:

Answer:

Explanation:

Configure

In a VMware vSphere environment, integrating HPE Alletra storage typically involves the installation of the HPE Storage Peer Motion Utility or the HPE Storage Connection Manager for VMware, which provides the VASA (vSphere APIs for Storage Awareness) provider functionality. This integration allows vCenter to manage storage arrays directly for tasks such as provisioning Virtual Volumes (vVols) and monitoring storage health.

According to the HPE Alletra Integration Guide for VMware, after the plug-in or provider is registered with vCenter, an administrator can verify its status through the vSphere Client UI. As shown in the exhibit (image_64a7b7.png), the administrator is currently viewing the Storage Providers section under the Configure tab of the vCenter object. This specific view is the correct location to verify that the HPE VASA provider is active and communicating. The list under "Storage Provider/Storage System" should show the Alletra array's URL and a status of "Active" or "Online."

If the administrator wants to verify the registration of the UI-based plug-in itself (which adds specific HPE menus and dashboards), they should click the three dots (...) at the end of the navigation bar. This reveals additional hidden tabs where specialized vendor extensions, such as the HPE Storage dashboard, are located. Successful installation is confirmed if the HPE-specific provider is listed with a valid certificate and the "Last Rescan" time is current. Failure to see the provider in this list indicates that the registration process (typically performed via the array's management console or a separate appliance) was not completed successfully, which would prevent the use of advanced features like Storage Policy Based Management (SPBM).

An HPE Partner is talking to a potential customer about the HPE Alletra MP B10000 storage array solution. What is an important feature the partner should share with the customer?

Options:

When writing data into volatile memory, SCM is persistent with batteries.

Stripe sizes vary from 16 (data) + 3 (parity) to 146 (data) + 3 (parity).

If locally decodable EC is implemented by the customer, this will increase rebuild time.

The behavior of common applications can be predicted with Workload Simulator.

Answer:

B

Explanation:

The HPE Alletra MP B10000 (Block storage) represents a paradigm shift in HPE's high-end storage strategy by utilizing a modular, disaggregated architecture. One of the most significant technical advantages of this platform is its Advanced Erasure Coding and the way it handles data layout across the disaggregated NVMe capacity.

According to the HPE Alletra MP technical deep-dive documents, the system does not use traditional fixed RAID groups. Instead, it uses a massive, distributed stripe mechanism. The software is capable of varying the stripe width dynamically based on the number of available drives and nodes in the cluster. This allows the system to achieve industry-leading capacity efficiency. Specifically, the system can utilize stripe sizes ranging from a minimum of 16+3 to a maximum of 146+3. This high data-to-parity ratio (e.g., 146 data segments for every 3 parity segments) allows customers to realize significantly higher usable capacity from their raw NVMe investment compared to traditional RAID 6 (6+2 or 8+2) or even typical erasure coding in competitive mid-range arrays.
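To make the data-to-parity comparison concrete, the sketch below computes the fraction of each stripe that holds data, using the stripe layouts named above. It is a simplified illustration: it considers parity overhead only and ignores sparing, metadata, and any other reserved capacity, so the results are not usable-capacity predictions for a real system.

```python
def stripe_efficiency(data: int, parity: int) -> float:
    """Fraction of a stripe that holds data rather than parity."""
    if data <= 0 or parity < 0:
        raise ValueError("data must be positive and parity non-negative")
    return data / (data + parity)


# Layouts mentioned in the explanation (data, parity).
layouts = {
    "RAID 6 (6+2)": (6, 2),
    "RAID 6 (8+2)": (8, 2),
    "16+3": (16, 3),
    "146+3": (146, 3),
}

for name, (d, p) in layouts.items():
    print(f"{name}: {stripe_efficiency(d, p):.1%} of the stripe is data")
```

The wider the stripe at a fixed parity count, the smaller the share of raw capacity spent on parity, which is the effect the explanation attributes to the 146+3 layout.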

Option A is technically incorrect because, in the Alletra MP, data is typically committed to persistent NVMe media or SCM (Storage Class Memory) in a way that doesn't rely on legacy battery-backed volatile DRAM in the same manner as older controllers. Option C is incorrect because Locally Decodable Erasure Coding is actually designed to reduce rebuild times by requiring fewer IOPS to reconstruct a missing fragment. Option D is not the best answer: although "Workload Simulator" is a tool used in sizing (NinjaStars), the most "important" architectural feature listed, and the one that differentiates the Alletra MP's efficiency, is its unusually wide, scalable stripe width.

A company has many applications running on bare metal, as well as on VMs.

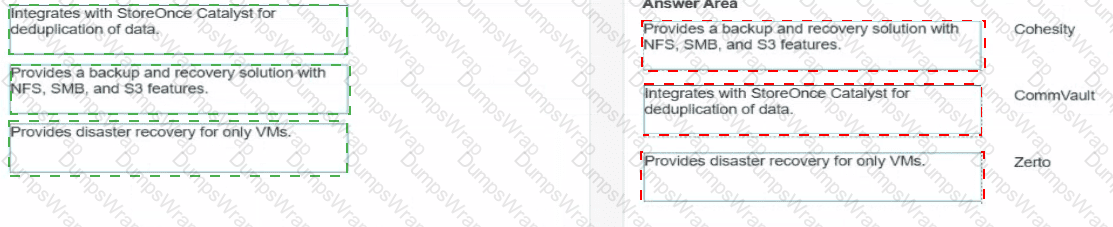

Match the data protection software solution with its description. Each answer will be used once.

Options:

Answer:

Explanation:

Cohesity: Provides a backup and recovery solution with NFS, SMB, and S3 features.

Commvault: Integrates with StoreOnce Catalyst for deduplication of data.

Zerto: Provides disaster recovery for only VMs.

Enterprise data protection requires selecting the right software partner to align with specific infrastructure needs, whether protecting bare-metal servers, virtualized workloads, or modern unstructured data.

Cohesity: This solution is defined by its "multicloud data platform" approach. It is often used to consolidate secondary storage silos by providing a single platform that handles not only backup and recovery but also serves as a scale-out NAS. It natively provides NFS, SMB, and S3 features, allowing it to act as a target for unstructured data while simultaneously protecting applications and VMs.

Commvault: As a long-standing leader in enterprise backup, Commvault features deep, verified integration with HPE hardware. A key differentiator for HPE customers is how Commvault integrates with StoreOnce Catalyst. This integration allows Commvault to manage the movement of deduplicated data directly to StoreOnce appliances without needing to rehydrate the data, significantly reducing network traffic and storage costs across the enterprise.

Zerto: Unlike traditional backup products that rely on snapshots, Zerto utilizes continuous data protection (CDP) through the hypervisor layer. While it is a powerhouse for replication and orchestration, it is architecturally focused on virtualized environments. Within the context of this comparison, it is the solution that provides disaster recovery for only VMs, as its Virtual Replication Appliances (VRAs) are purpose-built to intercept I/O within VMware or Hyper-V environments.